How to hire AI engineers

If you’re leading a team that uses AI in your product in some way, you probably need to hire AI engineers. As defined in this article, that’s someone with conventional engineering skills in addition to knowledge of language models and prompt engineering, without being a full-fledged Machine Learning expert.

But how do you hire someone with this skillset? At Elicit we’ve been applying machine learning to reasoning tools since 2018, and our technical team is a mix of ML experts and what we can now call AI engineers. This article will cover our process from job description through interviewing. (You can also flip the perspectives here and use it just as easily for how to get hired as an AI engineer!)

My own journey

Before getting into the brass tacks, I want to share my journey to becoming an AI engineer.

Up until a few years ago, I was happily working my job as an engineering manager of a big team at a late-stage startup. Like many, I was tracking the rapid increase in AI capabilities stemming from the deep learning revolution, but it was the release of GPT-3 in 2020 which was the watershed moment. At the time, we were all blown away by how the model could string together coherent sentences on demand. (Oh how far we’ve come since then!)

I’d been a professional software engineer for nearly 15 years—enough to have experienced one or two technology cycles—but I could see this was something categorically new. I found this simultaneously exciting and somewhat disconcerting. I knew I wanted to dive into this world, but it seemed like the only path was going back to school for a master’s degree in Machine Learning. I started talking with my boss about options for taking a sabbatical or doing a part-time distance learning degree.

In 2021, I instead decided to launch a startup focused on productizing new research ideas on ML interpretability. It was through that process that I reached out to Andreas—a leading ML researcher and founder of Elicit—to see if he would be an advisor. Over the next few months, I learned more about Elicit: that they were trying to apply these fascinating technologies to the real-world problems of science, and with a business model that aligned it with safety goals. I realized that I was way more excited about Elicit than I was about my own startup ideas, and wrote about my motivations at the time.

Three years later, it’s clear this was a seismic shift in my career on the scale of when I chose to leave my comfy engineering job at IBM to go through the Y Combinator program back in 2008. Working with this new breed of technology has been more intellectually stimulating, challenging, and rewarding than I could have imagined.

Deep ML expertise not required

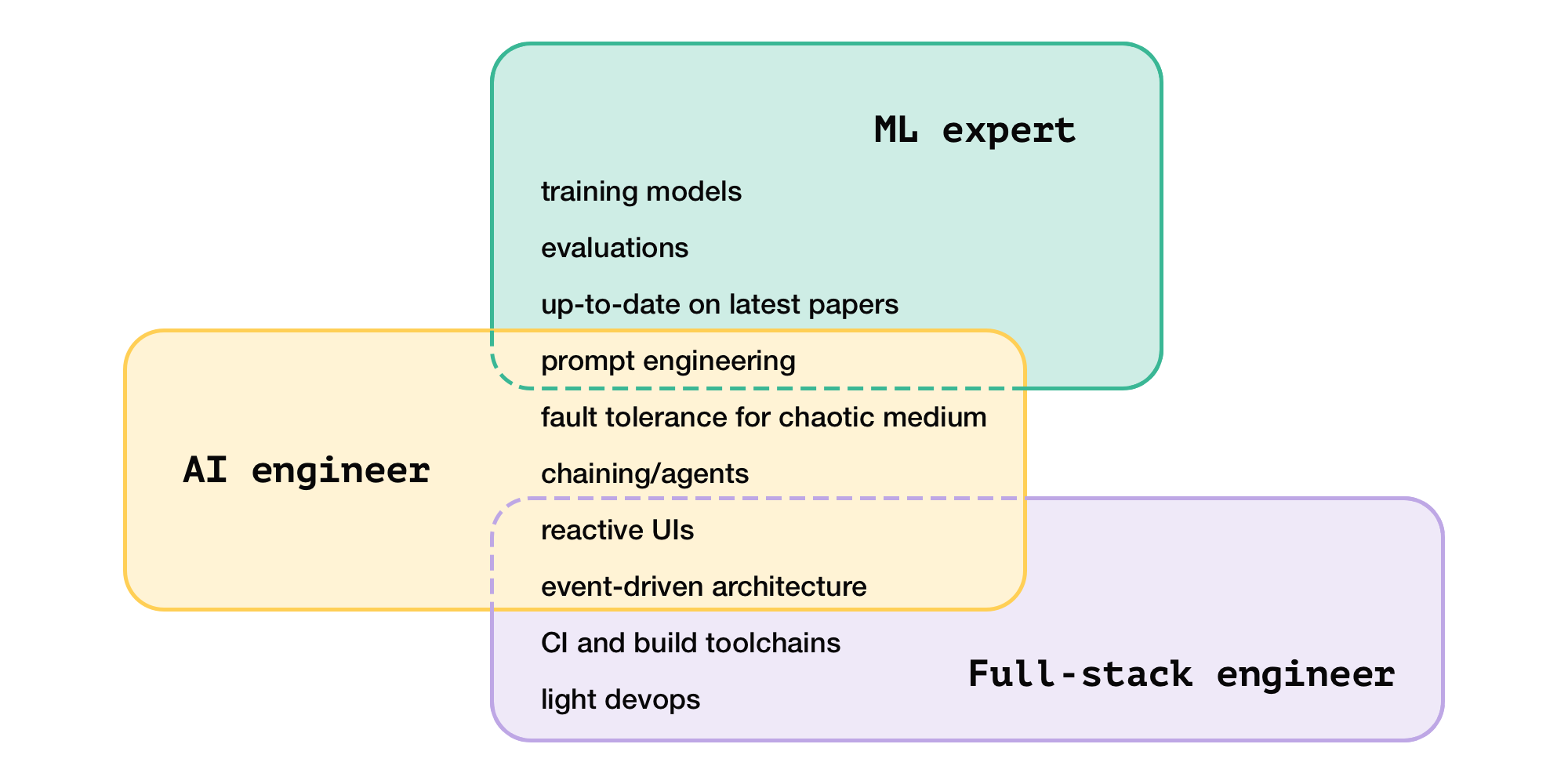

It’s important to note that AI engineers are not ML experts, nor is that their best contribution to a tech team.

In our article Living documents as an AI UX pattern, we wrote:

It’s easy to think that AI advancements are all about training and applying new models, and certainly this is a huge part of our work in the ML team at Elicit. But those of us working in the UX part of the team believe that we have a big contribution to make in how AI is applied to end-user problems.

We think of LLMs as a new medium to work with, one that we’ve barely begun to grasp the contours of. New computing mediums like GUIs in the 1980s, web/cloud in the 90s and 2000s, and multitouch smartphones in the 2000s/2010s opened a whole new era of engineering and design practices. So too will LLMs open new frontiers for our work in the coming decade.

To compare to the early era of mobile development: great iOS developers didn’t require a detailed understanding of the physics of capacitive touchscreens. But they did need to know the capabilities and limitations of a multi-touch screen, the constrained CPU and storage available, the context in which the user is using it (very different from a webpage or desktop computer), etc.

In the same way, an AI engineer needs to work with LLMs as a medium that is fundamentally different from other compute mediums. That means an interest in the ML side of things, whether through their own self-study, tinkering with prompts and model fine-tuning, or following along in #llm-paper-club. But this understanding is so that they can work with the medium effectively versus, say, spending their days training new models.

Language models as a chaotic medium

So if we’re not expecting deep ML expertise from AI engineers, what are we expecting? This brings us to what makes LLMs different.

We’ll assume that our ideal candidate is already inspired by, and full of ideas about, all the new capabilities AI can bring to software products.

But the flip side is all the things that make this new medium difficult to work with. LLM calls are annoying due to high latency (sometimes measured in tens of seconds rather than milliseconds), extreme variance in latency, and high error rates even under normal operation. Not to mention getting extremely different answers to the same prompt provided to the same model on two consecutive calls!

The net effect is that an AI engineer, even working at the application development level, needs to have a skillset comparable to distributed systems engineering. Handling errors, retries, asynchronous calls, streaming responses, parallelizing and recombining model calls, the halting problem, and fallbacks are all part of a day in the life of an AI engineer. Chaos engineering gets new life in the era of AI.
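To make that concrete, here is a minimal Python sketch of a few of those concerns: per-attempt timeouts, retries with backoff, fallback to a second model, and fanning out calls in parallel. Everything named here is an assumption for illustration: `call_model` is a hypothetical stand-in for a real model API, and the model names `"primary"` and `"backup"` are made up. This is not Elicit’s implementation.

```python
import asyncio
import random


class ModelError(Exception):
    """Raised by the hypothetical model client when a call fails."""


async def call_model(model: str, prompt: str) -> str:
    """Stand-in for a real LLM API call (assumption): slow, variable, sometimes failing."""
    await asyncio.sleep(random.uniform(0.001, 0.01))  # simulated, variable latency
    if model == "primary" and "flaky" in prompt:
        raise ModelError(f"{model} failed on {prompt!r}")
    return f"[{model}] answer to {prompt!r}"


async def call_with_retries(model, prompt, *, attempts=3, timeout=1.0, base_delay=0.01):
    """Retry with a per-attempt timeout and exponential backoff plus jitter."""
    for attempt in range(attempts):
        try:
            return await asyncio.wait_for(call_model(model, prompt), timeout)
        except (ModelError, asyncio.TimeoutError):
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            await asyncio.sleep(base_delay * 2**attempt * random.uniform(0.5, 1.5))


async def call_with_fallback(prompt, models=("primary", "backup")):
    """Try each model in order; fail only when every model has failed."""
    last_error = None
    for model in models:
        try:
            return await call_with_retries(model, prompt)
        except (ModelError, asyncio.TimeoutError) as exc:
            last_error = exc
    raise RuntimeError("all models failed") from last_error


async def answer_all(prompts):
    """Fan out calls in parallel and recombine the results in input order."""
    return await asyncio.gather(*(call_with_fallback(p) for p in prompts))
```

Calling `asyncio.run(answer_all(["stable question", "flaky question"]))` answers the first prompt with the primary model and the second with the backup, after the primary has exhausted its retries. Even this toy version has to decide what counts as retryable and how long to wait, which is exactly the kind of judgment the role calls for.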

Skills and qualities in candidates

Let’s combine what we don’t need (deep ML expertise) with what we do (the ability to work with the capabilities and limitations of the medium). From that, we start to see what Elicit looks for in AI engineers:

- Conventional software engineering skills. Especially back-end engineering on complex, data-intensive applications.

  - Professional, real-world experience with applications at scale.

  - Deep, hands-on experience across a few back-end web frameworks.

  - Light devops and an understanding of infrastructure best practices.

  - Queues, message buses, event-driven and serverless architectures, … there’s no single “correct” approach, but having a deep toolbox to draw from is very important.

- A genuine curiosity and enthusiasm for the capabilities of language models.

  - One or more serious projects (side projects are fine) of using them in interesting ways on a unique domain.

    - …ideally with some level of factored cognition, e.g. breaking the problem down into chunks, making thoughtful decisions about which things to push to the language model and which stay within the realm of conventional heuristics and compute capabilities.

  - Personal studying with resources like Elicit’s ML reading list. Part of the role is collaborating with the ML engineers and researchers on our team. To do so, the candidate needs to “speak their language” somewhat, just as a mobile engineer needs some familiarity with backends in order to collaborate effectively on API creation with backend engineers.

- An understanding of the challenges that come along with working with large models (high latency, variance, etc.) leading to a defensive, fault-first mindset.

  - Careful and principled handling of error cases, asynchronous code (and ability to reason about and debug it), streaming data, caching, logging and analytics for understanding behavior in production.

  - This is similar to the mindset one develops working on conventional apps that are complex, data-intensive, or large-scale. The difference is that an AI engineer needs this mindset even when working at relatively small scales!
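As a rough illustration of the factored cognition and caching points in the list above, here is a small Python sketch. It splits a document with ordinary code, filters chunks with a cheap keyword heuristic, and sends only the relevant chunks to the model, caching repeated prompts. The `fake_model` function and the keyword filter are hypothetical placeholders, assumed purely for the example; a real system would choose its own decomposition.

```python
from functools import lru_cache


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; a real one would be slow and costly."""
    return f"summary({len(prompt)} chars)"


@lru_cache(maxsize=1024)
def cached_model(prompt: str) -> str:
    """In-process cache: an identical prompt never reaches the model twice."""
    return fake_model(prompt)


def split_into_chunks(text: str, max_words: int = 50) -> list:
    """Conventional code: chunking a document needs no model."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def is_relevant(chunk: str, keywords: set) -> bool:
    """Conventional heuristic: a cheap keyword filter before spending model calls."""
    return any(word.lower().strip(".,?!") in keywords for word in chunk.split())


def answer(text: str, question: str, keywords: set) -> list:
    """Push only the relevant chunks to the model, one small task per chunk."""
    chunks = [c for c in split_into_chunks(text) if is_relevant(c, keywords)]
    return [cached_model(f"{question}\n\n{chunk}") for chunk in chunks]
```

The interesting design question is where the boundary sits: here chunking and filtering stay in conventional code because they are cheap and deterministic, and only the part that needs language understanding goes to the model.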

On net, a great AI engineer will combine two seemingly contrasting perspectives: knowledge of, and a sense of wonder for, the capabilities of modern ML models; but also the understanding that this is a difficult and imperfect foundation, and the willingness to build resilient and performant systems on top of it.

Here’s the resulting AI engineer job description for Elicit. And here’s a template that you can borrow from for writing your own JD.

Hiring process

Once you know what you’re looking for in an AI engineer, the process is not too different from other technical roles. Here’s how we do it, broken down into two stages: sourcing and interviewing.

Sourcing

We’re primarily looking for people with (1) a familiarity with and interest in ML, and (2) proven experience building complex systems using web technologies. The former is important for culture fit and as an indication that the candidate will be able to do some light prompt engineering as part of their role. The latter is important because language model APIs are built on top of web standards and—as noted above—aren’t always the easiest tools to work with.

Only a handful of people have built complex ML-first apps, but fortunately the two qualities listed above are relatively independent. A candidate may have proven (2) through their professional experience and have some side projects which demonstrate (1).

Talking of side projects, evidence of creative and original prototypes is a huge plus as we’re evaluating candidates. We’ve barely scratched the surface of what’s possible to build with LLMs—even the current generation of models—so candidates who have been willing to dive into crazy “I wonder if it’s possible to…” ideas have a huge advantage.

Interviewing

The hard skills we spend most of our time evaluating during our interview process are in the “building complex systems using web technologies” side of things. We will be checking that the candidate is familiar with asynchronous programming, defensive coding, and distributed systems concepts and tools, and that they display an ability to think about scaling and performance. They needn’t have 10+ years of experience doing this stuff: even junior candidates can display an aptitude and thirst for learning which gives us confidence they’ll be successful tackling the difficult technical challenges we’ll put in front of them.

One anti-pattern—something which makes my heart sink when I hear it from candidates—is that they have no familiarity with ML, but claim that they’re excited to learn about it. The amount of free and easily-accessible resources available is incredible, so a motivated candidate should have already dived into self-study.

Putting all that together, here’s the interview process that we follow for AI engineer candidates:

- 30-minute introductory conversation. Non-technical, explaining the interview process, answering questions, understanding the candidate’s career path and goals.

- 60-minute technical interview. This is a coding exercise, where we play product manager and the candidate is making changes to a little web app. Here are some examples of topics we might hit upon through that exercise:

  - Update API endpoints to include extra metadata. Think about appropriate data types. Stub out frontend code to accept the new data.

  - Convert a synchronous REST API to an asynchronous streaming endpoint.

  - Cancellation of asynchronous work when a user closes their tab.

  - Choose an appropriate data structure to represent the pending, active, and completed ML work which is required to service a user request.

- 60–90 minute non-technical interview. Walk through the candidate’s professional experience, identifying high and low points, getting a grasp of what kinds of challenges and environments they thrive in.

- On-site interviews. Half a day in our office in Oakland, meeting as much of the team as possible: more technical and non-technical conversations.
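For a flavor of the technical interview topics listed above, here is one possible Python sketch that touches three of them: a data structure for pending, active, and completed work, an asynchronous stream of results, and cancellation when the consumer goes away. It is not our actual interview exercise or its solution. All names (`WorkItem`, `RequestWork`, `stream_results`) are hypothetical, and `asyncio.sleep` stands in for a slow model call.

```python
import asyncio
from dataclasses import dataclass, field
from enum import Enum
from typing import AsyncIterator, Dict, List, Optional


class Status(Enum):
    PENDING = "pending"
    ACTIVE = "active"
    COMPLETED = "completed"
    CANCELLED = "cancelled"


@dataclass
class WorkItem:
    name: str
    status: Status = Status.PENDING
    result: Optional[str] = None


@dataclass
class RequestWork:
    """All the ML work needed to service one user request, keyed by step name."""
    items: Dict[str, WorkItem] = field(default_factory=dict)

    def add(self, name: str) -> WorkItem:
        self.items[name] = WorkItem(name)
        return self.items[name]

    def by_status(self, status: Status) -> List[str]:
        return [i.name for i in self.items.values() if i.status is status]


async def run_item(item: WorkItem, delay: float, limit: asyncio.Semaphore) -> WorkItem:
    """One unit of (simulated) model work; stays PENDING while waiting for a slot."""
    try:
        async with limit:
            item.status = Status.ACTIVE
            await asyncio.sleep(delay)  # stands in for a slow model call
            item.result = f"{item.name} done"
            item.status = Status.COMPLETED
            return item
    except asyncio.CancelledError:
        item.status = Status.CANCELLED
        raise


async def stream_results(
    work: RequestWork, delays: Dict[str, float], max_concurrent: int = 2
) -> AsyncIterator[str]:
    """Async generator: yield each result as soon as it is ready."""
    limit = asyncio.Semaphore(max_concurrent)
    tasks = [asyncio.create_task(run_item(work.items[n], d, limit)) for n, d in delays.items()]
    try:
        for next_done in asyncio.as_completed(tasks):
            item = await next_done
            yield item.result
    finally:
        # Runs when the stream finishes or the consumer closes it early
        # (e.g. the user closed their tab): cancel whatever is still running.
        for task in tasks:
            task.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)
```

A web framework would wrap `stream_results` in a streaming response and call `aclose()` on it when the client disconnects. The part worth discussing with a candidate is the `finally` block: without it, abandoned requests keep consuming model capacity.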

The frontier is wide open

Although Elicit is perhaps further along than other companies on AI engineering, we also acknowledge that this is a brand-new field whose shape and qualities are only just now starting to form. We’re looking forward to hearing how other companies do this and being part of the conversation as the role evolves.

We’re excited for the AI Engineer World’s Fair as the next step for this emerging subfield.